Thought To Speech: A Paralyzed Man Finds His Voice Again

- Jia Chun

- Jul 7, 2025


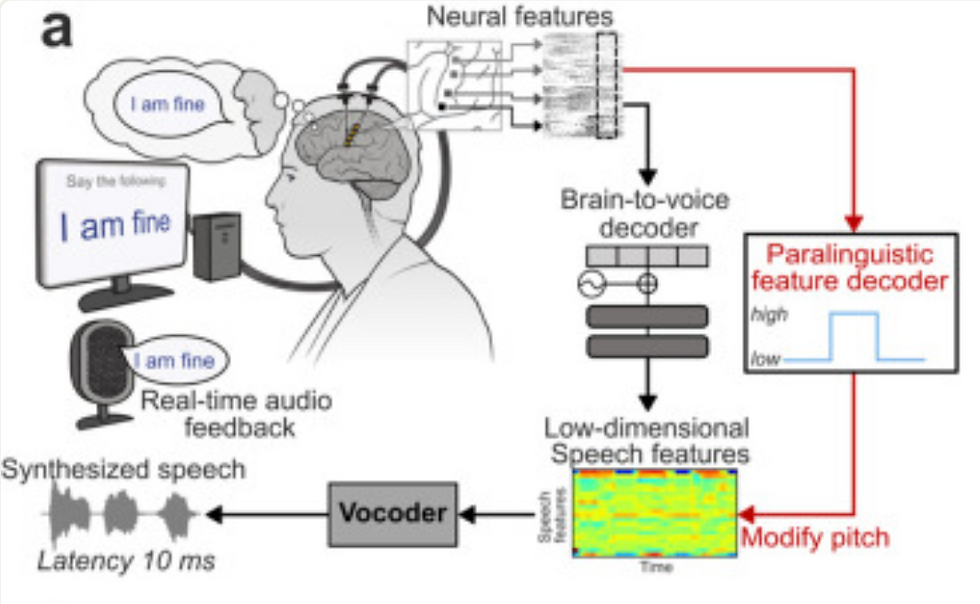

A study published in the scientific journal Nature on June 11, 2025, revealed a one-of-a-kind technology that allowed a paralyzed man to "speak." Brain-computer interfaces (BCIs) have typically been pursued as a therapy to restore speech by decoding neural activity. So far, however, BCIs have mainly been used to translate neural activity into text rather than into an audible voice.

Introduction

The participant who volunteered for the study was a man diagnosed with amyotrophic lateral sclerosis (ALS), a disease in which the nerve cells controlling voluntary muscle movement degenerate. He was also diagnosed with severe dysarthria, a condition in which a person lacks control of the muscles used for speaking. He was able to vocalize, but could communicate only through his care team, who were able to interpret his speech.

To carry out the study, electrodes (1.5 mm in length) were surgically placed in the left precentral gyrus, an area of the brain involved in initiating voluntary movements. Voltage signals from brain activity could then be sent to an external receiver and passed on to computers for neural decoding.

The participant had 10 tasks:

- Trying to speak cued sentences

- Miming (no vocalizing) cued sentences

- Responding to open-ended questions

- Spelling out words letter by letter

- Attempting to speak fake words (e.g., ooh, umm, ew)

- Saying interjections

- Speaking cued sentences at fast / slow speeds

- Speaking at a quiet / normal volume

- Modulating intonation (e.g., question or exclamation)

- Singing melodies

Results / Discussion

To evaluate the effectiveness of the real-time audio synthesis, 15 human listeners tried to match all 956 sentences spoken or thought by the volunteer to the correct transcript, with a mean accuracy of 94.34% and a median of 100% (showing that most listeners were able to understand all of the synthesized sentences). This demonstrates that the synthesized speech mirrors the intended speech at an extremely high success rate.
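To see why a median of 100% can sit next to a mean below 100%, here is a minimal sketch. It assumes one accuracy score per listener, and the numbers are made up for illustration; they are not the study's data.

```python
from statistics import mean, median

# Hypothetical per-listener matching accuracies in percent (NOT the study's data).
scores = [100, 100, 100, 100, 100, 90, 80]

avg = mean(scores)    # pulled below 100 by the few lower scores
mid = median(scores)  # the middle value once the scores are sorted

print(f"mean = {avg:.2f}%, median = {mid}%")
```

A few lower scores drag the mean down, while the median stays at 100% as long as more than half of the scores are perfect.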

The study also tested whether the volunteer could produce easily interpretable synthesized speech without vocalizing at all. To do this, the team tested the brain-to-voice BCI with mimed speech: the volunteer only mouthed the sentence and did not attempt to vocalize. Listeners were again tasked with matching sentences to the transcript, and the result was a Pearson correlation coefficient of 0.89 ± 0.03.

The Pearson correlation coefficient is a statistical measure of the strength of the linear relationship between two variables. It ranges from -1 to 1, and values close to 1 indicate a strong positive relationship. In this case, the coefficient measures how well listeners were able to correctly understand the synthesized sentence. The high value therefore indicates that listeners understood the sentences to a high degree, which suggests that people who are unable to speak do not have to strain their voice in order to vocalize (the volunteer reported that the miming method was much less tiresome). Those who may lose their ability to speak in the future could also use this tool.
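For readers curious about how the coefficient is calculated, here is a minimal sketch of the standard Pearson formula. The function name `pearson_r` and the two example sequences are hypothetical placeholders; they are not the study's data or its analysis code.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of the products of deviations from each mean (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical values for illustration only (NOT data from the study).
target = [1.0, 2.0, 3.0, 4.0, 5.0]
synthesized = [1.1, 1.9, 3.2, 3.8, 5.1]

print(round(pearson_r(target, synthesized), 3))
```

Two sequences that rise and fall together give a value near 1, while sequences that move in opposite directions give a value near -1.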

Other things to note

- Accuracy for free-response sentences was lower than for cued sentences, possibly because the volunteer paid less attention to enunciating words (reverting to his normal way of speaking)

- Although the brain-to-voice decoder did not know the fake words spoken (ew, umm, etc.), listeners were able to interpret the synthesized speech, with a Pearson correlation coefficient of 0.90 ± 0.01 (very high)

- The decoder was able to get question intonation across with an accuracy of 90.5% and word emphasis with an accuracy of 95.7% (very exciting!)

Limitations / Implications

However, there are a few minor limitations that should be mentioned.

- The study involved a single participant, so we are unsure whether the technology works the same way across a wider population

- The participant's energy level and engagement were a clear factor in whether the synthesized voice was easy to understand

- We are unsure whether long-term, consistent use will further improve accuracy as the program learns from the accumulated data

Even so, the implications of this study are amazing. The technology allowed the synthesis of a wide variety of vocalizations, including singing, and demonstrates that a brain-to-voice neuroprosthesis (an artificial device that works with the nervous system to restore or improve lost functions) can restore communication abilities.

The system could even decode loudness, pitch, and intonation, allowing the volunteer to regain a full range of expression. The participant used the speech synthesis BCI to say that being able to listen to his own voice "made me happy and it felt like my real voice."
